Content provided by LessWrong. All podcast content, including episodes, graphics, and podcast descriptions, is uploaded and provided directly by LessWrong or their podcast platform partner. If you believe someone is using your copyrighted work without your permission, you can follow the process outlined here: https://th.player.fm/legal

“You are not too ‘irrational’ to know your preferences.” by DaystarEld

Epistemic Status: 13 years working as a therapist for a wide variety of populations, 5 of them working with rationalists and EA clients. 7 years teaching and directing at over 20 rationality camps and workshops. This is an extremely short and colloquially written form of points that could be expanded on to fill a book, and there is plenty of nuance to practically everything here, but I am extremely confident of the core points in this frame, and have used it to help many people break out of or avoid manipulative practices.

TL;DR: Your wants and preferences are not invalidated by smarter or more “rational” people's preferences. What feels good or bad to someone is not a monocausal result of how smart or stupid they are.

Alternative titles to this post are "Two people are enough to form a cult" and "Red flags if dating rationalists," but this [...]

---

Outline:

(02:53) 1) You are not too stupid to know what you want.

(07:34) 2) Feeling hurt is not a sign of irrationality.

(13:15) 3) Illegible preferences are not invalid.

(17:22) 4) Your preferences do not need to fully match your community's.

(21:43) Final Thoughts

The original text contained 3 footnotes which were omitted from this narration.

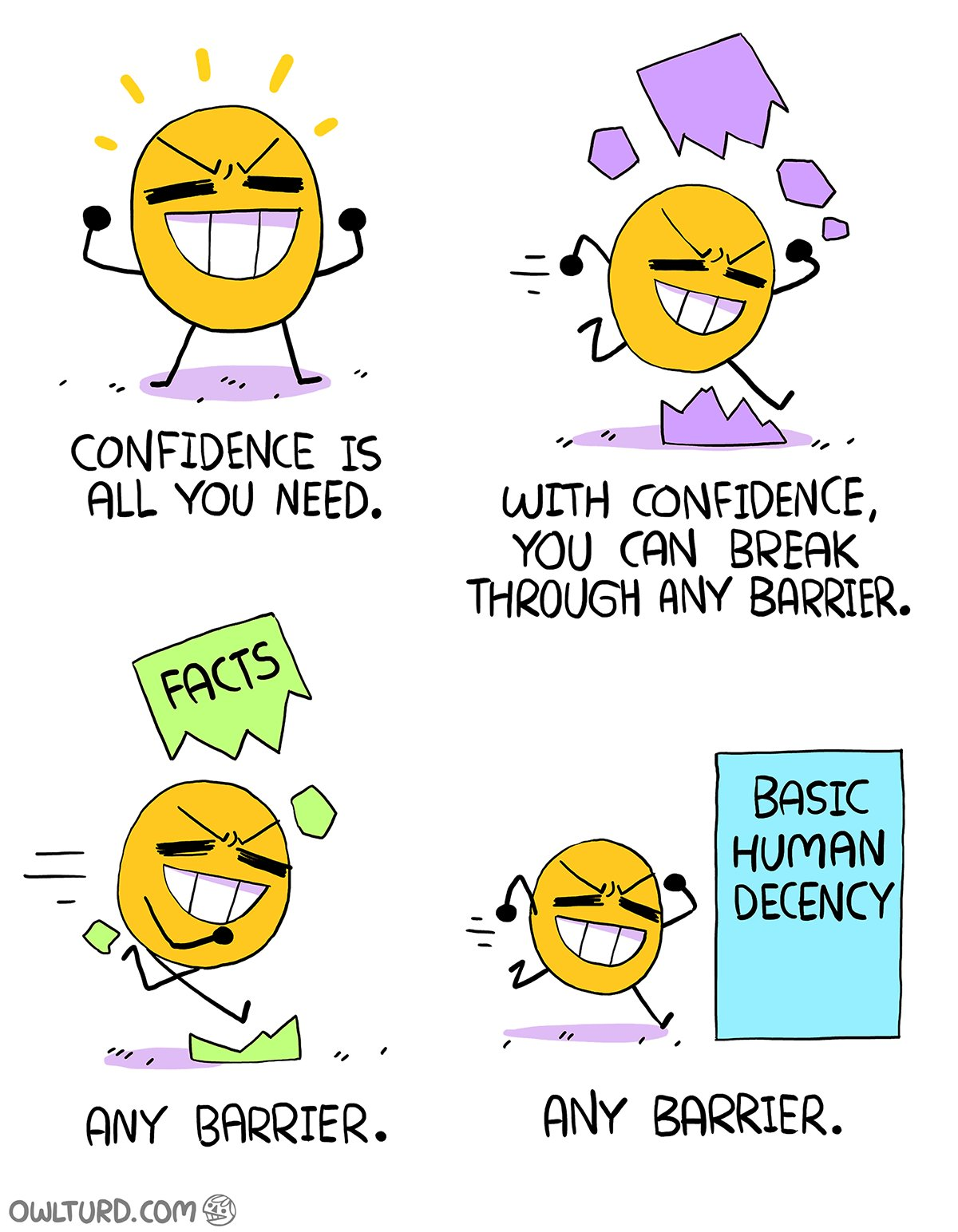

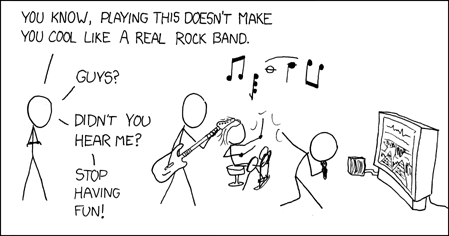

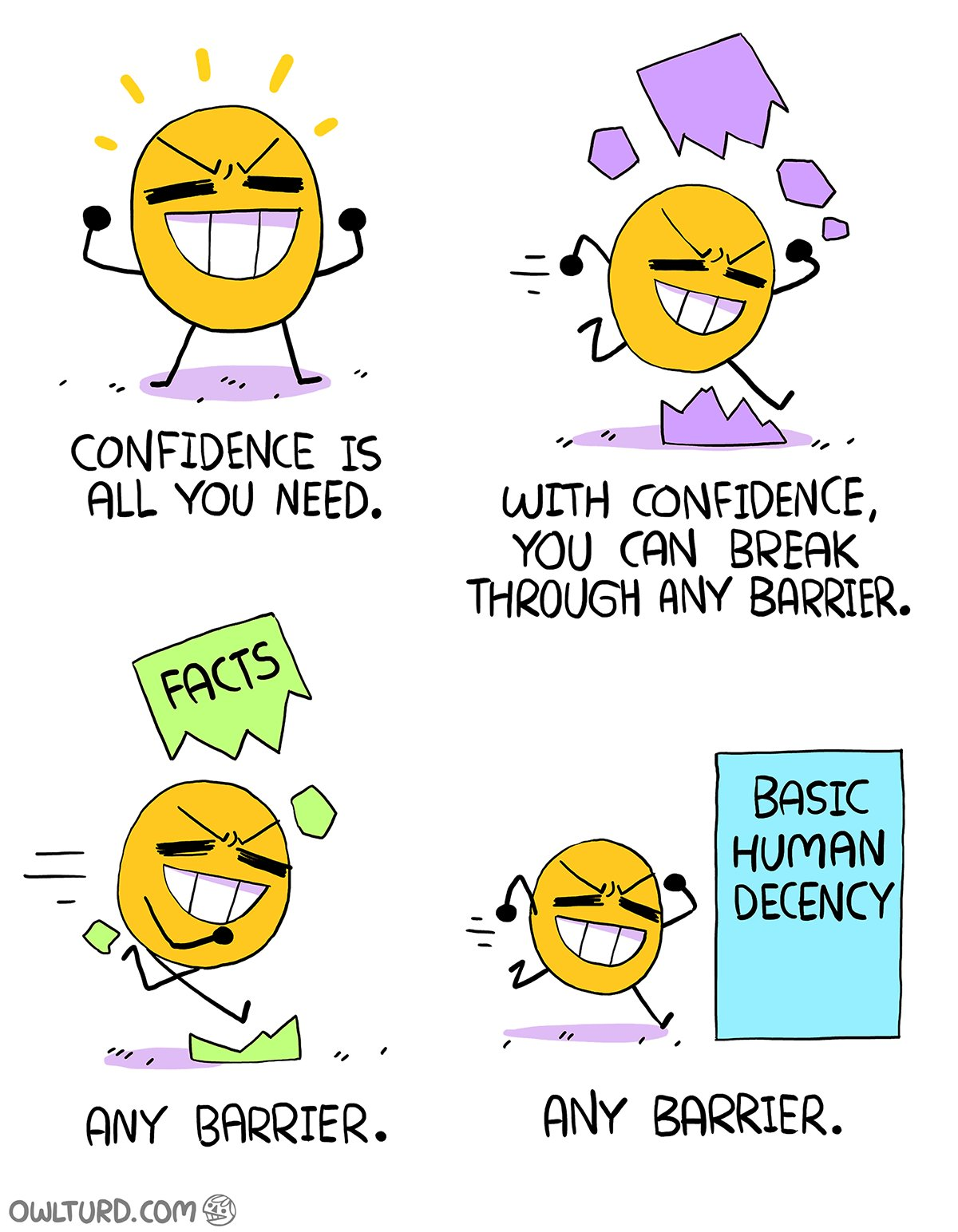

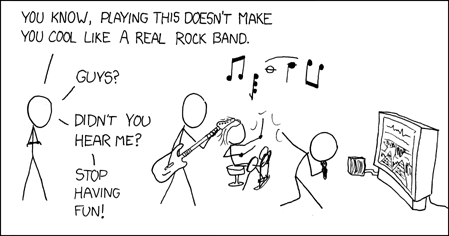

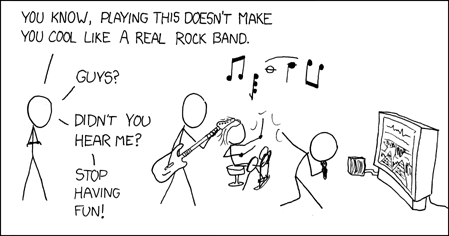

The original text contained 2 images which were described by AI.

---

First published:

November 26th, 2024

Source:

https://www.lesswrong.com/posts/LifRBXdenQDiX4cu8/you-are-not-too-irrational-to-know-your-preferences-1

---

Narrated by TYPE III AUDIO.

All episodes (505)

This is a link post.

to follow up my philanthropic pledge from 2020, i've updated my philanthropy page with the 2024 results. in 2024 my donations funded $51M worth of endpoint grants (plus $2.0M in admin overhead and philanthropic software development). this comfortably exceeded my 2024 commitment of $42M (20k times $2100.00, the minimum price of ETH in 2024). this also concludes my 5-year donation pledge, but of course my philanthropy continues: eg, i’ve already made over $4M in endpoint grants in the first quarter of 2025 (not including 2024 grants that were slow to disburse), as well as pledged at least $10M to the 2025 SFF grant round.

---

First published:

April 23rd, 2025

Source:

https://www.lesswrong.com/posts/8ojWtREJjKmyvWdDb/jaan-tallinn-s-2024-philanthropy-overview

Linkpost URL:

https://jaan.info/philanthropy/#2024-results

---

Narrated by TYPE III AUDIO.

I’ve been thinking recently about what sets apart the people who’ve done the best work at Anthropic.

You might think that the main thing that makes people really effective at research or engineering is technical ability, and among the general population that's true. Among people hired at Anthropic, though, we’ve restricted the range by screening for extremely high-percentile technical ability, so the remaining differences, while they still matter, aren’t quite as critical. Instead, people's biggest bottleneck eventually becomes their ability to get leverage, i.e., to find and execute work that has a big impact-per-hour multiplier.

For example, here are some types of work at Anthropic that tend to have high impact-per-hour, or a high impact-per-hour ceiling when done well (of course this list is extremely non-exhaustive!):

Improving tooling, documentation, or dev loops. A tiny amount of time fixing a papercut in the right way can save [...]

---

Outline:

(03:28) 1. Agency

(03:31) Understand and work backwards from the root goal

(05:02) Don't rely too much on permission or encouragement

(07:49) Make success inevitable

(09:28) 2. Taste

(09:31) Find your angle

(11:03) Think real hard

(13:03) Reflect on your thinking

---

First published:

April 19th, 2025

Source:

https://www.lesswrong.com/posts/DiJT4qJivkjrGPFi8/impact-agency-and-taste

---

Narrated by TYPE III AUDIO.

LessWrong (Curated & Popular)

[Linkpost] “To Understand History, Keep Former Population Distributions In Mind” by Arjun Panickssery 5:42

This is a link post.

Guillaume Blanc has a piece in Works in Progress (I assume based on his paper) about how France's fertility declined earlier than in other European countries, and how its power waned as its relative population declined starting in the 18th century. In 1700, France had 20% of Europe's population (4% of the whole world population).

Kissinger writes in Diplomacy with respect to the Versailles Peace Conference:

Victory brought home to France the stark realization that revanche had cost it too dearly, and that it had been living off capital for nearly a century. France alone knew just how weak it had become in comparison with Germany, though nobody else, especially not America, was prepared to believe it ... Though France's allies insisted that its fears were exaggerated, French leaders knew better. In 1880, the French had represented 15.7 percent of Europe's population. By 1900, that [...]

---

First published:

April 23rd, 2025

Source:

https://www.lesswrong.com/posts/gk2aJgg7yzzTXp8HJ/to-understand-history-keep-former-population-distributions

Linkpost URL:

https://arjunpanickssery.substack.com/p/to-understand-history-keep-former

---

Narrated by TYPE III AUDIO.

“AI-enabled coups: a small group could use AI to seize power” by Tom Davidson, Lukas Finnveden, rosehadshar 15:22

We’ve written a new report on the threat of AI-enabled coups.

I think this is a very serious risk – comparable in importance to AI takeover but much more neglected.

In fact, AI-enabled coups and AI takeover have pretty similar threat models. To see this, here's a very basic threat model for AI takeover:

Humanity develops superhuman AI

Superhuman AI is misaligned and power-seeking

Superhuman AI seizes power for itself

And now here's a closely analogous threat model for AI-enabled coups:

Humanity develops superhuman AI

Superhuman AI is controlled by a small group

Superhuman AI seizes power for the small group

While the report focuses on the risk that someone seizes power over a country, I think that similar dynamics could allow someone to take over the world. In fact, if someone wanted to take over the world, their best strategy might well be to first stage an AI-enabled [...]

---

Outline:

(02:39) Summary

(03:31) An AI workforce could be made singularly loyal to institutional leaders

(05:04) AI could have hard-to-detect secret loyalties

(06:46) A few people could gain exclusive access to coup-enabling AI capabilities

(09:46) Mitigations

(13:00) Vignette

The original text contained 2 footnotes which were omitted from this narration.

---

First published:

April 16th, 2025

Source:

https://www.lesswrong.com/posts/6kBMqrK9bREuGsrnd/ai-enabled-coups-a-small-group-could-use-ai-to-seize-power-1

---

Narrated by TYPE III AUDIO.

Back in the 1990s, ground squirrels were briefly fashionable pets, but their popularity came to an abrupt end after an incident at Schiphol Airport on the outskirts of Amsterdam. In April 1999, a cargo of 440 of the rodents arrived on a KLM flight from Beijing, without the necessary import papers. Because of this, they could not be forwarded on to the customer in Athens. But nobody was able to correct the error and send them back either. What could be done with them? It's hard to think there wasn’t a better solution than the one that was carried out; faced with the paperwork issue, airport staff threw all 440 squirrels into an industrial shredder.

[...]

It turned out that the order to destroy the squirrels had come from the Dutch government's Department of Agriculture, Environment Management and Fishing. However, KLM's management, with the benefit of hindsight, said that [...]

---

First published:

April 22nd, 2025

Source:

https://www.lesswrong.com/posts/nYJaDnGNQGiaCBSB5/accountability-sinks

---

Narrated by TYPE III AUDIO.

Subtitle: Bad for loss of control risks, bad for concentration of power risks

I’ve had this sitting in my drafts for the last year. I wish I’d been able to release it sooner, but on the bright side, it’ll make a lot more sense to people who have already read AI 2027.

There's a good chance that AGI will be trained before this decade is out. By AGI I mean “An AI system at least as good as the best human X’ers, for all cognitive tasks/skills/jobs X.” Many people seem to be dismissing this hypothesis ‘on priors’ because it sounds crazy. But actually, a reasonable prior should conclude that this is plausible.[1] For more on what this means, what it might look like, and why it's plausible, see AI 2027, especially the Research section.

If so, by default the existence of AGI will be a closely guarded [...]

The original text contained 8 footnotes which were omitted from this narration.

---

First published:

April 18th, 2025

Source:

https://www.lesswrong.com/posts/FGqfdJmB8MSH5LKGc/training-agi-in-secret-would-be-unsafe-and-unethical-1

---

Narrated by TYPE III AUDIO.

Though, given my doomerism, I think the natsec framing of the AGI race is likely wrongheaded, let me accept the Dario/Leopold/Altman frame that AGI will be aligned to the national interest of a great power. These people seem to take as an axiom that a USG AGI will be better in some way than CCP AGI. Has anyone written justification for this assumption?

I am neither an American citizen nor a Chinese citizen.

What would it mean for an AGI to be aligned with "Democracy" or "Confucianism" or "Marxism with Chinese characteristics" or "the American constitution"? Contingent on a world where such an entity exists and is compatible with my existence, what would my life be as a non-citizen in each system? Why should I expect USG AGI to be better than CCP AGI?

---

First published:

April 19th, 2025

Source:

https://www.lesswrong.com/posts/MKS4tJqLWmRXgXzgY/why-should-i-assume-ccp-agi-is-worse-than-usg-agi-1

---

Narrated by TYPE III AUDIO.

“Surprising LLM reasoning failures make me think we still need qualitative breakthroughs for AGI” by Kaj_Sotala 35:51

Introduction

Writing this post puts me in a weird epistemic position. I simultaneously believe that:

The reasoning failures that I'll discuss are strong evidence that current LLM- or, more generally, transformer-based approaches won't get us AGI

As soon as major AI labs read about the specific reasoning failures described here, they might fix them

But future versions of GPT, Claude etc. succeeding at the tasks I've described here will provide zero evidence of their ability to reach AGI. If someone makes a future post where they report that they tested an LLM on all the specific things I described here and it aced all of them, that will not update my position at all.

That is because all of the reasoning failures that I describe here are surprising in the sense that given everything else that they can do, you’d expect LLMs to succeed at all of these tasks. The [...]

---

Outline:

(00:13) Introduction

(02:13) Reasoning failures

(02:17) Sliding puzzle problem

(07:17) Simple coaching instructions

(09:22) Repeatedly failing at tic-tac-toe

(10:48) Repeatedly offering an incorrect fix

(13:48) Various people's simple tests

(15:06) Various failures at logic and consistency while writing fiction

(15:21) Inability to write young characters when first prompted

(17:12) Paranormal posers

(19:12) Global details replacing local ones

(20:19) Stereotyped behaviors replacing character-specific ones

(21:21) Top secret marine databases

(23:32) Wandering items

(23:53) Sycophancy

(24:49) What's going on here?

(32:18) How about scaling? Or reasoning models?

---

First published:

April 15th, 2025

Source:

https://www.lesswrong.com/posts/sgpCuokhMb8JmkoSn/untitled-draft-7shu

---

Narrated by TYPE III AUDIO.

“Frontier AI Models Still Fail at Basic Physical Tasks: A Manufacturing Case Study” by Adam Karvonen 21:00

Dario Amodei, CEO of Anthropic, recently worried about a world where only 30% of jobs become automated, leading to class tensions between the automated and non-automated. Instead, he predicts that nearly all jobs will be automated simultaneously, putting everyone "in the same boat." However, based on my experience spanning AI research (including first author papers at COLM / NeurIPS and attending MATS under Neel Nanda), robotics, and hands-on manufacturing (including machining prototype rocket engine parts for Blue Origin and Ursa Major), I see a different near-term future.

Since the GPT-4 release, I've evaluated frontier models on a basic manufacturing task, which tests both visual perception and physical reasoning. While Gemini 2.5 Pro recently showed progress on the visual front, all models tested continue to fail significantly on physical reasoning. They still perform terribly overall. Because of this, I think that there will be an interim period where a significant [...]

---

Outline:

(01:28) The Evaluation

(02:29) Visual Errors

(04:03) Physical Reasoning Errors

(06:09) Why do LLM's struggle with physical tasks?

(07:37) Improving on physical tasks may be difficult

(10:14) Potential Implications of Uneven Automation

(11:48) Conclusion

(12:24) Appendix

(12:44) Visual Errors

(14:36) Physical Reasoning Errors

---

First published:

April 14th, 2025

Source:

https://www.lesswrong.com/posts/r3NeiHAEWyToers4F/frontier-ai-models-still-fail-at-basic-physical-tasks-a

---

Narrated by TYPE III AUDIO.

“Negative Results for SAEs On Downstream Tasks and Deprioritising SAE Research (GDM Mech Interp Team Progress Update #2)” by Neel Nanda, lewis smith, Senthooran Rajamanoharan, Arthur Conmy, Callum… 57:32

Audio note: this article contains 31 uses of latex notation, so the narration may be difficult to follow. There's a link to the original text in the episode description.

Lewis Smith*, Sen Rajamanoharan*, Arthur Conmy, Callum McDougall, Janos Kramar, Tom Lieberum, Rohin Shah, Neel Nanda

* = equal contribution

The following piece is a list of snippets about research from the GDM mechanistic interpretability team, which we didn’t consider a good fit for turning into a paper, but which we thought the community might benefit from seeing in this less formal form. These are largely things that we found in the process of a project investigating whether sparse autoencoders were useful for downstream tasks, notably out-of-distribution probing.

TL;DR

To validate whether SAEs were a worthwhile technique, we explored whether they were useful on the downstream task of OOD generalisation when detecting harmful intent in user prompts [...]

---

Outline:

(01:08) TL;DR

(02:38) Introduction

(02:41) Motivation

(06:09) Our Task

(08:35) Conclusions and Strategic Updates

(13:59) Comparing different ways to train Chat SAEs

(18:30) Using SAEs for OOD Probing

(20:21) Technical Setup

(20:24) Datasets

(24:16) Probing

(26:48) Results

(30:36) Related Work and Discussion

(34:01) Is it surprising that SAEs didn't work?

(39:54) Dataset debugging with SAEs

(42:02) Autointerp and high frequency latents

(44:16) Removing High Frequency Latents from JumpReLU SAEs

(45:04) Method

(45:07) Motivation

(47:29) Modifying the sparsity penalty

(48:48) How we evaluated interpretability

(50:36) Results

(51:18) Reconstruction loss at fixed sparsity

(52:10) Frequency histograms

(52:52) Latent interpretability

(54:23) Conclusions

(56:43) Appendix

The original text contained 7 footnotes which were omitted from this narration.

---

First published:

March 26th, 2025

Source:

https://www.lesswrong.com/posts/4uXCAJNuPKtKBsi28/sae-progress-update-2-draft

---

Narrated by TYPE III AUDIO.

This is a link post.

When I was a really small kid, one of my favorite activities was to try and dam up the creek in my backyard. I would carefully move rocks into high walls, pile up leaves, or try patching the holes with sand. The goal was just to see how high I could get the lake, knowing that if I plugged every hole, eventually the water would always rise and defeat my efforts. Beaver behaviour.

One day, I had the realization that there was a simpler approach. I could just go get a big 5 foot long shovel, and instead of intricately locking together rocks and leaves and sticks, I could collapse the sides of the riverbank down and really build a proper big dam. I went to ask my dad for the shovel to try this out, and he told me, very heavily paraphrasing, 'Congratulations. You've [...]

---

First published:

April 10th, 2025

Source:

https://www.lesswrong.com/posts/rLucLvwKoLdHSBTAn/playing-in-the-creek

Linkpost URL:

https://hgreer.com/PlayingInTheCreek

---

Narrated by TYPE III AUDIO.

This is part of the MIRI Single Author Series. Pieces in this series represent the beliefs and opinions of their named authors, and do not claim to speak for all of MIRI.

Okay, I'm annoyed at people covering AI 2027 burying the lede, so I'm going to try not to do that. The authors predict a strong chance that all humans will be (effectively) dead in 6 years, and this agrees with my best guess about the future. (My modal timeline has loss of control of Earth mostly happening in 2028, rather than late 2027, but nitpicking at that scale hardly matters.) Their timeline to transformative AI also seems pretty close to the perspective of frontier lab CEO's (at least Dario Amodei, and probably Sam Altman) and the aggregate market opinion of both Metaculus and Manifold!

If you look on those market platforms you get graphs like this:

Both [...]

---

Outline:

(02:23) Mode ≠ Median

(04:50) There's a Decent Chance of Having Decades

(06:44) More Thoughts

(08:55) Mid 2025

(09:01) Late 2025

(10:42) Early 2026

(11:18) Mid 2026

(12:58) Late 2026

(13:04) January 2027

(13:26) February 2027

(14:53) March 2027

(16:32) April 2027

(16:50) May 2027

(18:41) June 2027

(19:03) July 2027

(20:27) August 2027

(22:45) September 2027

(24:37) October 2027

(26:14) November 2027 (Race)

(29:08) December 2027 (Race)

(30:53) 2028 and Beyond (Race)

(34:42) Thoughts on Slowdown

(38:27) Final Thoughts

---

First published:

April 9th, 2025

Source:

https://www.lesswrong.com/posts/Yzcb5mQ7iq4DFfXHx/thoughts-on-ai-2027

---

Narrated by TYPE III AUDIO.

Short AI takeoff timelines seem to leave no time for some lines of alignment research to become impactful. But any research rebalances the mix of currently legible research directions that could be handed off to AI-assisted alignment researchers or early autonomous AI researchers whenever they show up. So even hopelessly incomplete research agendas could still be used to prompt future capable AI to focus on them, while in the absence of such incomplete research agendas we'd need to rely on AI's judgment more completely. This doesn't crucially depend on giving significant probability to long AI takeoff timelines, or on expected value in such scenarios driving the priorities.

Potential for AI to take up the torch makes it reasonable to still prioritize things that have no hope at all of becoming practical for decades (with human effort). How well AIs can be directed to advance a line of research [...]

---

First published:

April 9th, 2025

Source:

https://www.lesswrong.com/posts/3NdpbA6M5AM2gHvTW/short-timelines-don-t-devalue-long-horizon-research

---

Narrated by TYPE III AUDIO.

“Alignment Faking Revisited: Improved Classifiers and Open Source Extensions” by John Hughes, abhayesian, Akbir Khan, Fabien Roger 41:04

In this post, we present a replication and extension of an alignment faking model organism:

Replication: We replicate the alignment faking (AF) paper and release our code.

Classifier Improvements: We significantly improve the precision and recall of the AF classifier. We release a dataset of ~100 human-labelled examples of AF for which our classifier achieves an AUROC of 0.9 compared to 0.6 from the original classifier.

Evaluating More Models: We find Llama family models, other open source models, and GPT-4o do not AF in the prompted-only setting when evaluating using our new classifier (other than a single instance with Llama 3 405B).

Extending SFT Experiments: We run supervised fine-tuning (SFT) experiments on Llama (and GPT4o) and find that AF rate increases with scale. We release the fine-tuned models on Huggingface and scripts.

Alignment faking on 70B: We find that Llama 70B alignment fakes when both using the system prompt in the [...]

---

Outline:

(02:43) Method

(02:46) Overview of the Alignment Faking Setup

(04:22) Our Setup

(06:02) Results

(06:05) Improving Alignment Faking Classification

(10:56) Replication of Prompted Experiments

(14:02) Prompted Experiments on More Models

(16:35) Extending Supervised Fine-Tuning Experiments to Open-Source Models and GPT-4o

(23:13) Next Steps

(25:02) Appendix

(25:05) Appendix A: Classifying alignment faking

(25:17) Criteria in more depth

(27:40) False positives example 1 from the old classifier

(30:11) False positives example 2 from the old classifier

(32:06) False negative example 1 from the old classifier

(35:00) False negative example 2 from the old classifier

(36:56) Appendix B: Classifier ROC on other models

(37:24) Appendix C: User prompt suffix ablation

(40:24) Appendix D: Longer training of baseline docs

---

First published:

April 8th, 2025

Source:

https://www.lesswrong.com/posts/Fr4QsQT52RFKHvCAH/alignment-faking-revisited-improved-classifiers-and-open

---

Narrated by TYPE III AUDIO.

Summary: We propose measuring AI performance in terms of the length of tasks AI agents can complete. We show that this metric has been consistently exponentially increasing over the past 6 years, with a doubling time of around 7 months. Extrapolating this trend predicts that, in under five years, we will see AI agents that can independently complete a large fraction of software tasks that currently take humans days or weeks.

The length of tasks (measured by how long they take human professionals) that generalist frontier model agents can complete autonomously with 50% reliability has been doubling approximately every 7 months for the last 6 years. The shaded region represents 95% CI calculated by hierarchical bootstrap over task families, tasks, and task attempts.

Full paper | Github repo

We think that forecasting the capabilities of future AI systems is important for understanding and preparing for the impact of [...]

---

Outline:

(08:58) Conclusion

(09:59) Want to contribute?

---

First published:

March 19th, 2025

Source:

https://www.lesswrong.com/posts/deesrjitvXM4xYGZd/metr-measuring-ai-ability-to-complete-long-tasks

---

Narrated by TYPE III AUDIO.
