
Content provided by LessWrong. All podcast content, including episodes, graphics, and podcast descriptions, is uploaded and provided directly by LessWrong or their podcast platform partner. If you believe someone is using your copyrighted work without your permission, you can follow the process outlined here: https://th.player.fm/legal


“Anthropic is (probably) not meeting its RSP security commitments” by habryka


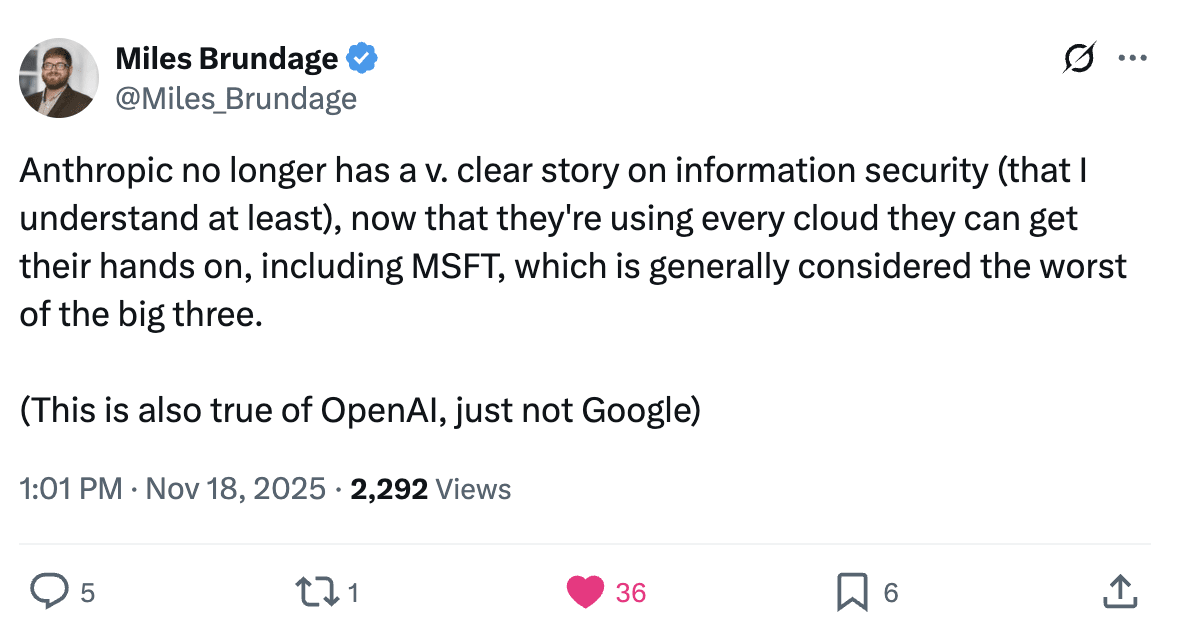

TLDR: An AI company's model weight security is at most as good as its compute providers' security. Anthropic has committed (with a bit of ambiguity, but IMO not that much ambiguity) to be robust to attacks from corporate espionage teams at companies where it hosts its weights. Anthropic seems unlikely to be robust to those attacks. Hence they are in violation of their RSP.

Anthropic is committed to being robust to attacks from corporate espionage teams (which includes corporate espionage teams at Google, Microsoft and Amazon)

From the Anthropic RSP:

When a model must meet the ASL-3 Security Standard, we will evaluate whether the measures we have implemented make us highly protected against most attackers’ attempts at stealing model weights.

We consider the following groups in scope: hacktivists, criminal hacker groups, organized cybercrime groups, terrorist organizations, corporate espionage teams, internal employees, and state-sponsored programs that use broad-based and non-targeted techniques (i.e., not novel attack chains).

[...]

We will implement robust controls to mitigate basic insider risk, but consider mitigating risks from sophisticated or state-compromised insiders to be out of scope for ASL-3. We define “basic insider risk” as risk from an insider who does not have persistent or time-limited [...]

---

Outline:

(00:37) Anthropic is committed to being robust to attacks from corporate espionage teams (which includes corporate espionage teams at Google, Microsoft and Amazon)

(03:40) Claude weights that are covered by ASL-3 security requirements are shipped to many Amazon, Google, and Microsoft data centers

(04:55) This means given executive buy-in by a high-level Amazon, Microsoft or Google executive, their corporate espionage team would have virtually unlimited physical access to Claude inference machines that host copies of the weights

(05:36) With unlimited physical access, a competent corporate espionage team at Amazon, Microsoft or Google could extract weights from an inference machine, without too much difficulty

(06:18) Given all of the above, this means Anthropic is in violation of its most recent RSP

(07:05) Postscript

---

First published:

November 18th, 2025

Source:

https://www.lesswrong.com/posts/zumPKp3zPDGsppFcF/anthropic-is-probably-not-meeting-its-rsp-security

---

Narrated by TYPE III AUDIO.

